The Generative Turn: On AIs as Stochastic Parrots and Art

A clunky title, but, I trust, not a clunky essay.

This is part of an emerging project that I am currently calling “A Skeptic’s Guide to Art with AI.” I have a third essay nearly done (on AI and Plagiarism), a fourth (on AI and fakes), and a fifth (on AI, Death, and Hauntology), substantially drafted. The plan is to publish one each Monday throughout the next month.

Each essay addresses a familiar complaint with AI and explores its relationship to culture throughout history, as well as the potential uses in art. When all that is wrapped up by the start of the summer, I will have a little book—think of Semiotext(e)’s Foreign Agents series—ready to publish. Given the pace of change in AI, I don’t imagine that waiting for a press would make sense and expect to self-publish, but if someone has a concrete proposal, I will gladly listen.

I do hope to return to the Florilegium—my series of posts about native plants, art, and design—maybe even this week, but my native plant bandwidth is tied up with being president of the Native Plant Society of New Jersey and, well, with tending the garden.

As always, this post is up on my web site, https://varnelis.net/works_and_projects/the-generative-turn-on-ais-as-stochastic-parrots-and-art/ and all I ask is that if you enjoy this post, you like it and share it. Comments are welcome too.

Large Language Model (LLM)-based Artificial Intelligences have been derided for hallucinating—a topic that I addressed in my last essay, “The New Surrealism. On AI and Hallucinations”—and labeled “stochastic parrots,” supposedly incapable of generating authentic meaning beyond remixed texts. Coined by researcher Emily Bender and her colleagues, the term “stochastic parrots” describes language models as systems that statistically predict and reproduce patterns in language without genuine understanding. And yet, anyone familiar with contemporary LLMs—particularly recent, sophisticated models like ChatGPT 4.5 or Claude Sonnet 3.7—knows that these “parrots” easily pass a simple Turing Test, Alan Turing’s proposed test of machine intelligence, which asks whether a person interacting with a machine can reliably distinguish it from a human. These convincingly human-like interactions underscore a deeper truth: predictability is fundamental to human society itself.1

From routine greetings to rituals marking life milestones—weddings, graduations, funerals—our shared existence thrives on stable expectations. Indeed, the stress that many of us—including the markets—feel in this first spring of the second Trump administration is caused precisely by the executive branch’s erratic, unpredictable behavior. Predictability is not merely useful; it is essential, ensuring interactions proceed smoothly without continuous negotiation. Every culture, whether traditional or contemporary, anchors itself in repeated rituals, social scripts, and familiar stereotypes. Consider the handshake, a simple gesture instantly communicating respect, trust, or greeting through mutual recognition. In Japan, a bow serves this function; in France, a kiss on each cheek. Such rituals persist because their predictability facilitates social cohesion. Without stable expectations, human interactions would require constant explanation and renegotiation, exhausting participants and weakening social bonds. Predictability permeates all levels of societal structure up to international relations. Diplomatic protocols, treaties, and international laws function precisely because nations rely on mutual expectations of predictable behavior. The uncertainty created when states deviate from established norms—as seen in trade wars, diplomatic tensions, or sudden military escalations—generates global anxiety, economic instability, and geopolitical friction.

Sociologist Erving Goffman, in his influential work The Presentation of Self in Everyday Life, describes human behavior as a series of performances carefully regulated by implicit scripts. These scripts guide everything from greetings between friends to complex professional interactions. Goffman suggests that without them, social life would descend into chaos. Individuals intuitively understand these roles, shifting seamlessly from one performance to another based on context: professional, familial, or social. Predictable interactions reassure participants by clarifying expectations, permitting people to anticipate and trust each other’s behaviors, reducing cognitive and emotional strain, fostering stability and cohesion within society.2

Similarly, anthropologist Pierre Bourdieu expands this notion through his concept of habitus. For Bourdieu, habitus represents the internalized structure of social life: individuals embody deeply ingrained habits, dispositions, and tastes acquired through repeated interactions within their specific cultural contexts. Habitus shapes behaviors, preferences, and perceptions so thoroughly that they become second nature, enabling individuals to intuitively navigate social environments without conscious effort. But because habitus includes deeply internalized habits, tastes, and expectations formed by one’s social environment, individuals unconsciously accept and replicate existing social structures, including hierarchies and power relations. In other words, people unknowingly reinforce patterns of privilege or disadvantage simply by acting in familiar, predictable ways. Predictability thus not only facilitates social cohesion but also perpetuates existing hierarchies and norms by normalizing and embedding them deeply within individuals’ perceptions and practices, ensuring social stability through repetition rather than conscious coercion.3

In stabilizing society, predictability also fosters stereotypes. First used in its modern sense by journalist Walter Lippmann in Public Opinion (1922), the word “stereotype” originally referred to mental images—simplified, predictable perceptions enabling quick social judgments. Even if stereotypes are commonly seen as leading to prejudice or discrimination, they nonetheless perform critical functions within society by enabling rapid cognitive processing in uncertain situations, providing shorthand classifications that allow individuals to navigate social complexity swiftly. For instance, professional stereotypes—expectations about doctors, lawyers, or teachers—guide interactions within these roles, establishing clear norms that enable efficient communication and professional conduct. Even resistance to stereotypes follows predictable patterns; the avant-garde artist deliberately violating expectations is, paradoxically, fulfilling another recognizable social role.4

This understanding of predictability’s role in human society offers a useful lens for reconsidering the critique of AI as merely “stochastic parrots.” If human communication and creativity themselves operate through pattern recognition, recombination, and contextual prediction, then the distinction between human and AI generation becomes less absolute. Both rely on absorbing patterns from their environment and producing variations that remain recognizable within specific contexts. The distinction lies not in the fundamental process but in the degree of consciousness, intention, and embodied experience that shapes the patterns being recognized and reproduced.

Art itself has always existed in this tension between repetition and innovation. Repetition lies at the core of all artistic practice—the Renaissance master learning through repetitive copying, the jazz musician repeating standard progressions until they become second nature, the novelist absorbing and repeating literary conventions. Repetition is not merely a pedagogical tool but the fundamental substrate of creativity itself. Through repetition, the artist internalizes traditions deeply enough to transform them. Through repetition, audiences develop the literacy to recognize both convention and deviation. Through repetition, cultural forms evolve and mutate across generations. What we celebrate as genius is often the capacity for repetition with a difference—the ability to repeat familiar patterns with just enough variation to create novel meaning. In this light, the “stochastic parrot” critique misses how thoroughly repetition underpins human art-making itself, and how creativity emerges not from originality ex nihilo but from the subtle modulation of repeated patterns.

The very predictability that stabilizes culture also enables innovation: this paradox lies at the heart of creative activity. Novelty emerges not through spontaneous, unconditioned invention but through subtle departures within structured repetition. Gilles Deleuze, in his pivotal text Difference and Repetition (1968), elucidates this dynamic. Deleuze argues against the misconception that repetition is mechanical and sterile. Instead, he insists repetition is inherently creative, each iteration introducing small yet meaningful variations. He distinguishes mechanical repetition—identical reproduction, like photocopying—from productive repetition, in which subtle divergences accumulate into genuine innovation. As incremental variations accumulate, genuine difference emerges. Creativity occurs precisely in the space of tension between the expected (predictable repetition) and the unexpected (subtle variation). Far from inhibiting innovation, predictability thus serves as its very foundation. Deleuze identifies this as the core mechanism underlying genuine innovation—whether in art, science, literature, or society.5

Repetition as a fundamental cognitive act predates humanity itself. Birdsong relies on familiar, established patterns with subtle variations in notes, pitch, or sequence. Whale song, too, consists of repeated phrases and themes that evolve continuously and dramatically over time. Consider the emergence of humanity, which we glimpse so vividly in the cave paintings at Chauvet Pont d’Arc (starting at 37,000 BP) and Lascaux (starting at 22,000 BP)—two artistic sites nearly as distant from each other in time as we are from Lascaux today. Both sites manifest strikingly consistent visual languages, demonstrating predictable, structured representations of animals and symbols. Both sites were inhabited for thousands of years, a fact that, in itself, is staggering to contemplate. Yet, far from diminishing their cultural significance, the predictability of this work demonstrates how human creativity is established within tradition and repetition.

Classical and medieval cultures explicitly acknowledged tradition as essential to creativity. Artists and scholars operated within established frameworks, regarding innovation as the careful refinement of inherited forms. During the Renaissance, often associated with the celebration of individual genius and divine inspiration, artists deeply relied on repetition and established conventions—perspective, anatomy, classical motifs—as scaffolding. Inspiration did not arise from unstructured impulse but flourished precisely within the constraints of recognized tradition. In music, composers such as Bach and Beethoven famously employed iterative variations on simple themes, each repetition pushing the motif further until something startlingly original emerged. Every artist, every thinker, every cultural producer operates within inherited conventions, echoing and altering familiar patterns to create meaning anew. Accomplished work is itself produced within the context of years of study, usually within long-established, predictable systems such as music theory.

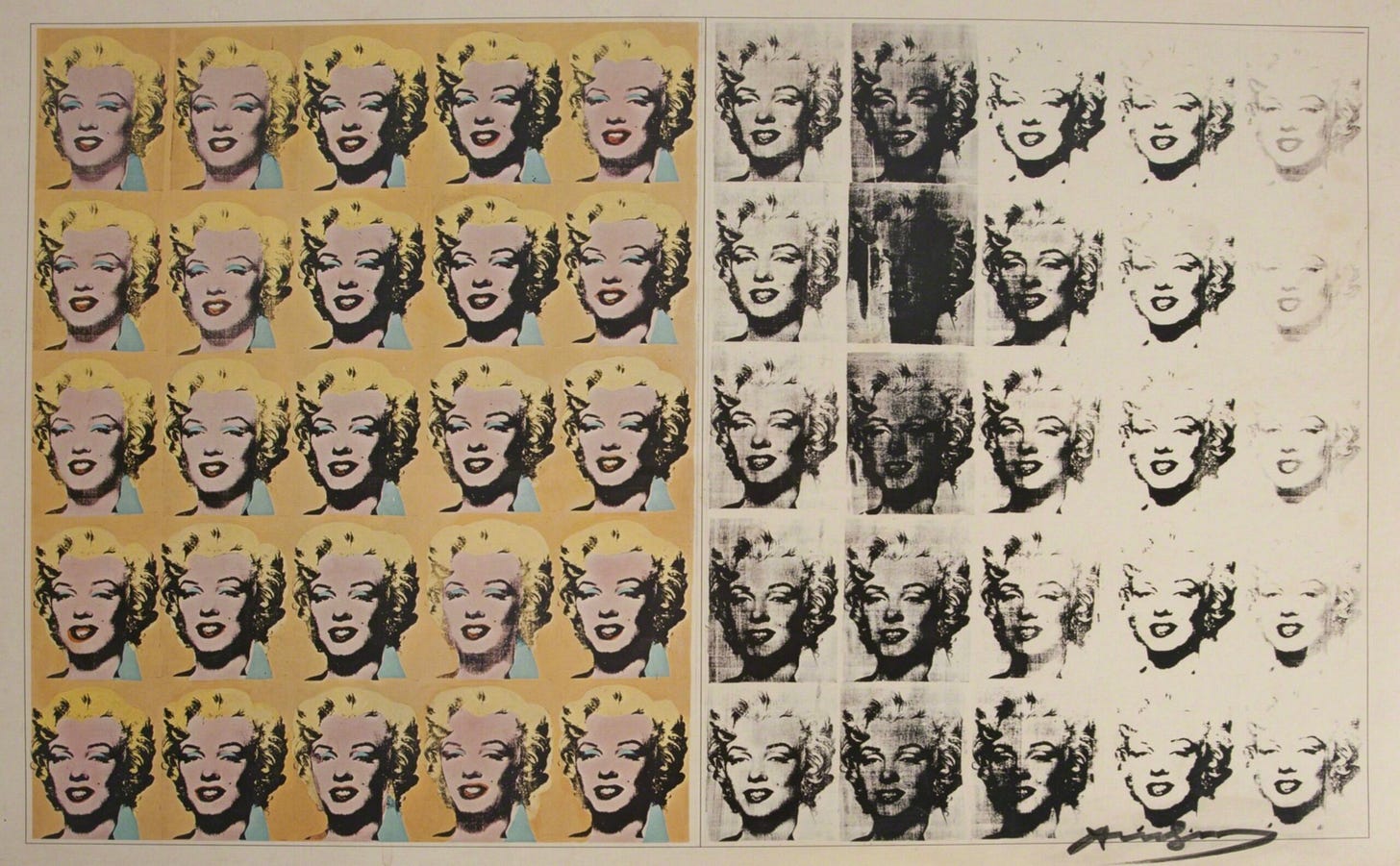

Thus, when modern avant-garde movements emerged, they did not abandon predictability; they relied upon it to heighten the impact of their provocations. Movements such as surrealism and dada strategically employed known conventions to disrupt expectations. Marcel Duchamp’s infamous Fountain (1917)—a common urinal presented as art—achieved its shock precisely by repeating familiar objects in unfamiliar contexts. Repetition set the stage; deviation created the effect. Or consider how postwar avant-garde artists explored repetitive techniques. Andy Warhol’s silkscreen works embodied this principle perfectly, creating endless repetitions of cultural icons and consumer products. In his renowned Marilyn Diptych (1962), Warhol reproduced the actress’s image fifty times across the canvas—twenty-five in vibrant color, twenty-five in fading black and white. The repetition itself becomes the subject, highlighting how mass media’s endless reproduction simultaneously immortalizes and empties images of meaning. Similarly, in his “Death and Disaster” series, Warhol repeated newspaper photographs of car crashes and suicides, the mechanical repetition simultaneously numbing viewers to tragedy while paradoxically intensifying its horror. Each repetition subtly differs from the last—variations in ink density, alignment, and color—creating a visual meditation on how mechanical reproduction transforms experience. Through these works, Warhol demonstrated how repetition is not merely reproduction but a transformative process, generating profound new meanings through the accumulation of subtle differences.

Understanding society and creativity as predictive helps us grasp how generative AI systems echo deeply human cultural processes. I suggested at the outset of this essay that, at heart, LLMs function as sophisticated statistical prediction machines. Given a phrase—“It was a dark and stormy…”—the AI calculates the most probable continuation (“night”) by drawing on patterns learned from billions of instances of textual data. Yet this process is not strictly deterministic. Instead, it operates probabilistically, meaning each prediction is shaped by subtle fluctuations and contextual nuances. AI’s predictions are never mechanically identical; they are always iterative reinterpretations of linguistic history. The model generates not only predictable continuations but also unexpected divergences—sometimes subtle, sometimes radical. Critics and researchers label these divergences “hallucinations,” often viewing them as technical flaws, although the hallucinations—like human dreams—are a source of creativity for the AIs, embodying precisely the generative logic Deleuze articulated: structured repetition producing meaningful differences.
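For readers who want to see the mechanism rather than take it on faith, here is a minimal sketch of probabilistic continuation. It is a toy, not how any particular model is implemented: the candidate words and their scores in `LOGITS` are invented for illustration, and `softmax` and `sample_next` are hypothetical helper names. The `temperature` parameter is the usual dial between the predictable and the surprising.

```python
import math
import random

# Hypothetical scores a model might assign to continuations of
# "It was a dark and stormy ...". The numbers are invented for illustration.
LOGITS = {"night": 5.0, "evening": 3.0, "sea": 2.0, "mood": 1.0}


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def sample_next(logits, temperature=1.0, rng=random):
    """Draw one continuation at random, weighted by its probability."""
    probs = softmax(logits, temperature)
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(0)
    for temperature in (0.2, 1.0, 2.0):
        draws = [sample_next(LOGITS, temperature, rng) for _ in range(10)]
        print(temperature, draws)
```

At a low temperature the toy almost always answers “night”; at a higher one the less likely continuations surface more often, which is the space in which the divergences discussed above appear.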

Diffusion models used for AI image generation use a similar predictive logic. Rather than predicting the next word or phrase, these systems predict the gradual emergence of coherent images from initial random noise. Through repeated, iterative steps, diffusion models systematically clarify visual forms by predicting and reducing noise incrementally. Each step is probabilistic, guided by learned patterns derived from extensive datasets of existing images. Just as LLMs generate meaningful text through slight divergences in their statistical predictions, diffusion models yield artistic novelty through controlled randomness. The structured, iterative process—oscillating between predictability and noise—allows the emergence of compelling visual imagery, ranging from convincingly realistic portraits to evocative, dream-like abstractions. Again, we find creativity emerging not from randomness alone, but from a deliberate, generative interplay between predictable structures and unpredictable deviations.
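The step-by-step emergence of form from noise can be sketched in a few lines. What follows is a deliberately crude toy and not a real diffusion model: a real system predicts noise with a trained neural network, whereas here a fixed list called `TARGET` stands in for the "learned patterns," and the step size and noise levels are arbitrary assumptions chosen only to make the loop legible.

```python
import random

# Stand-in for "learned patterns": a real model predicts noise with a trained
# neural network; here a fixed target plays that role (an assumption).
TARGET = [0.0, 0.5, 1.0, 0.5, 0.0]


def denoise(steps=50, seed=0):
    """Start from random noise and refine it step by step toward TARGET."""
    rng = random.Random(seed)
    sample = [rng.gauss(0.0, 1.0) for _ in TARGET]  # pure noise
    for step in range(steps):
        remaining = 1.0 - (step + 1) / steps  # shrinks to 0 at the last step
        for i, target in enumerate(TARGET):
            predicted_noise = sample[i] - target  # the toy "model's" guess
            sample[i] -= 0.2 * predicted_noise  # remove part of that noise
            sample[i] += rng.gauss(0.0, 0.05) * remaining  # small fresh noise
    return sample


def distance(a, b):
    """Mean absolute difference between two equal-length sequences."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


if __name__ == "__main__":
    for seed in (1, 2):
        result = denoise(seed=seed)
        print(seed, [round(v, 3) for v in result], distance(result, TARGET))
```

Different seeds arrive at results that are close to the same pattern yet never identical, a small-scale picture of the interplay between predictable structure and controlled randomness.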

III

1. Take a piece of paper and write down a random word.

2. Read the word out loud.

3. Write down a sentence that incorporates the word you just read.

4. Read the sentence out loud.

5. Repeat steps 1-4 until you feel satisfied with the result.

VIII

1. Choose a cat to be the performer.

2. Place the cat in a comfortable space with plenty of room to move around.

3. Allow the cat to explore its surroundings and become familiar with the space.

4. Observe the cat’s movements and vocalizations, and listen for any sounds or patterns that emerge.

5. As the cat moves and makes sounds, use a microphone or other recording device to capture its vocalizations and movements.

6. Use the recorded sounds to create a series of musical compositions that incorporate the sounds of the cat.

Kazys Varnelis, 20 Subroutines for Humans Made by a Computer, 2022

The generative approach pioneered by mid-20th century artists offers perhaps the most direct historical precedent for today’s AI systems. One of the most influential pioneers was composer John Cage, whose landmark work, Music of Changes (1951), explicitly relied on chance operations determined by the ancient Chinese divination text, the I Ching. Crucially, Cage did not abandon composition entirely to random chance. Instead, he devised intricate procedures and rules, repeatedly applying chance-generated decisions to every musical parameter—pitch, rhythm, dynamics, duration. The resulting performances were thus simultaneously predictable (structured by rules) and unpredictable (generated by chance). Cage understood perfectly Deleuze’s generative logic: structure and repetition establish conditions within which genuine innovation emerges unpredictably. His music continually surprised listeners precisely because it repeated a clearly defined generative procedure, with each iteration subtly and meaningfully different.

Composers such as Brian Eno moved generative music increasingly toward explicit algorithmic practices in the late 20th century. Eno’s groundbreaking “generative music” created systems of musical production defined by fixed rules or algorithms that continuously yielded new variations. In compositions like Music for Airports (1978), Eno programmed systems of overlapping tape loops and repetitive sequences. While each loop was predictable in itself, their interactions produced constantly shifting and novel auditory experiences. The music was simultaneously repetitive (structured loops) and unpredictably evolving (variations through algorithmic combination). Eno captured precisely Deleuze’s generative vision, arguing explicitly that repetition and structure do not constrain creativity but rather create conditions necessary for meaningful novelty.
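The arithmetic behind overlapping loops is simple enough to sketch. The loop lengths below are hypothetical values chosen for illustration, not measurements of Eno's tapes, and `realignment_time` and `phases` are names invented for this example. The point is only that loops of unequal length, each perfectly predictable, take a very long time to line up again.

```python
from functools import reduce
from math import gcd

# Hypothetical loop lengths in seconds; these are illustrative values,
# not measurements of Eno's actual tapes.
LOOP_LENGTHS = [17, 23, 29]


def lcm(a, b):
    """Least common multiple of two positive integers."""
    return a * b // gcd(a, b)


def realignment_time(lengths):
    """Seconds until all loops restart together again."""
    return reduce(lcm, lengths)


def phases(lengths, t):
    """Position of each loop (seconds into its cycle) at time t."""
    return tuple(t % length for length in lengths)


if __name__ == "__main__":
    print(realignment_time(LOOP_LENGTHS))
    print(phases(LOOP_LENGTHS, 60))
```

With these assumed lengths, three loops of under half a minute each only coincide again after 11,339 seconds, a little over three hours, and every moment in between presents a combination of phases that has not occurred before.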

This generative approach spread across artistic disciplines. Visual artists, inspired by Cage and Eno, adopted algorithmic systems to explore repetition’s creative possibilities. Vera Molnar, one of the pioneers of computer-generated art, produced works based on algorithmically repeated variations of geometric shapes. While each repetition was controlled by predefined rules, tiny programmed deviations ensured continual, subtle differences between iterations. Similarly, Sol LeWitt’s conceptual art pieces began as instructions—rules for execution—that could be repeated endlessly by different people, at different times, in different spaces. Each instantiation of LeWitt’s instructions differed slightly, reinforcing the generative logic that repetition does not produce identical copies but inevitably creates meaningful differences.

Generative art thus illustrates how repetition can become the core medium of creative expression. Far from constraining artists, rules, algorithms, and structured repetitions enable innovative exploration of themes and possibilities that otherwise would remain inaccessible. Each iteration becomes an experiment, its outcomes unpredictable precisely because they emerge from the structured repetition of defined systems. AI image generators lend themselves to this framework, frequently making four images as a default—beginning with random numerical seeds that determine the initial pattern from which the AI builds. This approach allows artists to generate multiple variants simultaneously, comparing outcomes and selecting directions for further exploration. By reusing the same prompt, either with new random seeds or with the same seed deliberately maintained across multiple generations, artists can produce hundreds or even thousands of iterations, methodically refining their vision until a satisfactory result emerges. This process transforms the act of creation into a collaborative dialogue between human intention and algorithmic interpretation. Cage, Cornell, Eno, Molnar, and LeWitt all understood this paradox: structured repetition and generative unpredictability are not opposing terms, but interdependent conditions of genuine creativity.
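The role of the seed can be shown with a toy. This is not the interface of any real image generator: `generate`, `batch`, and `PALETTE` are hypothetical names, and a short list of color words stands in for an image. It assumes only what the paragraph above describes, that a prompt plus a seed fixes the starting point from which everything else follows.

```python
import random

PALETTE = ["red", "ochre", "blue", "black", "white"]  # illustrative only


def generate(prompt, seed, size=6):
    """Toy stand-in for an image generator: prompt + seed -> a 'picture'."""
    rng = random.Random(f"{prompt}|{seed}")  # same inputs, same random stream
    return [rng.choice(PALETTE) for _ in range(size)]


def batch(prompt, seeds):
    """One variant per seed, like the default grid of four images."""
    return {seed: generate(prompt, seed) for seed in seeds}


if __name__ == "__main__":
    grid = batch("a stormy night", seeds=[1, 2, 3, 4])
    for seed, picture in grid.items():
        print(seed, picture)
    # Re-running with a kept seed reproduces that variant exactly.
    print(generate("a stormy night", 3) == grid[3])
```

Keeping a seed lets an artist return to one variant and change only the prompt; drawing new seeds asks the system for fresh variations on the same instructions.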

These historical practices offer crucial context for understanding contemporary AI art systems. When we frame LLMs or image generators as “stochastic parrots,” we overlook their deep continuity with established generative traditions. Like Cage consulting the I Ching or Eno layering asynchronous tape loops, AI systems deploy structured repetition across vast datasets, producing variations that are at once predictable (drawn from training data) and novel (emerging from complex interactions). The stochastic operations of AI closely resemble those that made aleatory music or generative ambient works radical in their time.

AI-generated artifacts reflect human linguistic and cultural patterns, mirroring entrenched societal expectations and assumptions. Each output emerges from the sedimented repetition of past human expression. In this sense, AI functions less as a creator than as a mirror—revealing the biases, desires, anxieties, and mythologies embedded in its source material. Critics who denounce AI-generated imagery as “racist” mistake effect for cause: the model does not invent prejudice; it exposes the structures that already exist.

The launch of Google Gemini’s image generator illustrated this vividly. In an effort to correct perceived racial bias, the model was tuned for enforced representational diversity. The result: grotesque anachronisms such as Asian English monarchs or, notoriously, Black Nazis. These were not expressions of machine malice but symptoms of a misguided attempt to overwrite probabilistic patterning with ideological intention. The surreal outputs were not bugs—they were faithful to the data and incoherent only in light of contradictory demands placed upon them. If the episode revealed anything, it was the underlying surrealism of the cultural archive itself.

Indeed, if, in my last essay, I described how generative AI can produce a new surrealism, we see here an additional digital equivalent to surrealist practices in its resemblance to automatic writing, which André Breton famously described as “pure psychic automatism.” Just as surrealists sought creative potential in spontaneous, subconscious associations free from rational control, AI language models generate outputs through automated processes free from explicit human intent. The unpredictable linguistic connections that result—sometimes logically flawed but poetically intriguing—parallel surrealist attempts to bypass conscious rationality. Generative AI, guided by probabilistic algorithms rather than neurons, extends surrealist logic into the digital realm, recombining existing cultural patterns into unexpected forms.

What if we imagine human-AI interactions not as diminishing human creativity but as generative processes akin to Cage’s dialogue with chance, Eno’s generative loops, or LeWitt’s instructional repetitions? What if generative AI is not merely a tool but an active cultural interlocutor? Artists, writers, and designers already intentionally explore AI-generated hallucinations and linguistic variations to stimulate creativity.

In this light, generative AI is not a radical break from human creativity but rather clarifies and amplifies cultural processes always at play, explicitly revealing how repetition, predictability, and variation dynamically produce meaning. Deleuze’s theoretical insights and generative art’s practical explorations present AI as fundamentally human—a computational extension of society’s inherent creative logic. Far from constraining creativity, structured repetition becomes a catalyst for thoughtful critique and conscious innovation. Artists and intellectuals can thus use AI not as a replacement but as a creative collaborator, embracing its generative potential to expand rather than diminish cultural imagination.

This collaboration reshapes conceptions of authorship, originality, and authenticity. Instead of the solitary genius traditionally celebrated by Western culture, creativity emerges as explicitly collaborative, iterative, and distributed between humans and machines. Authorship becomes an intentional dialogue: humans provide conceptual direction and editorial judgment, while AI contributes unexpected variations drawn from vast cultural datasets. Creativity thus evolves away from solitary invention toward a practice of coordination, curation, and generative experimentation.

The stochastic parrot, it would seem, does have a lesson for us—but it is not that LLMs are unintelligent; rather, it is that human creativity itself is inherently generative. We are all, to a degree, stochastic parrots. If learning to work with machines and computers compelled earlier generative artists, learning—and indeed inventing—new forms of collaboration with AI is the task awaiting the generative creators of our era.

1. Emily Bender, Timnit Gebru, Angelina McMillan-Major, and Shmargaret Shmitchell, “On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?,” Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, March 2021, https://dl.acm.org/doi/10.1145/3442188.3445922. ↩

2. Erving Goffman, The Presentation of Self in Everyday Life (New York: Doubleday, 1959). ↩

3. Pierre Bourdieu, Outline of a Theory of Practice (New York: Cambridge University Press, 1977). ↩

4. Walter Lippmann, Public Opinion (New York: Harcourt, Brace and Company, 1922). ↩

5. Gilles Deleuze, Difference and Repetition, trans. Paul Patton (New York: Columbia University Press, 1994). ↩